StandbyMP brings standardized, automated and scalable DR for PostgreSQL

PostgreSQL has continued to grow in popularity and now holds around 17% of the database market. We are seeing it adopted for increasingly complex projects and critical datasets. Scalability, extensibility, and cost-effectiveness make it an appealing choice for many DBAs. However, while the benefits are numerous, PostgreSQL is not without its challenges. These include the complexity of setting up, managing, and visualizing replication and high-availability configurations, and a lack of good support options if things go wrong.

Establishing good Disaster Recovery is foundational for any critical business database. Although PostgreSQL provides the building blocks for DR out of the box, assembling them into a working solution is complicated, and the risk of human error is high.

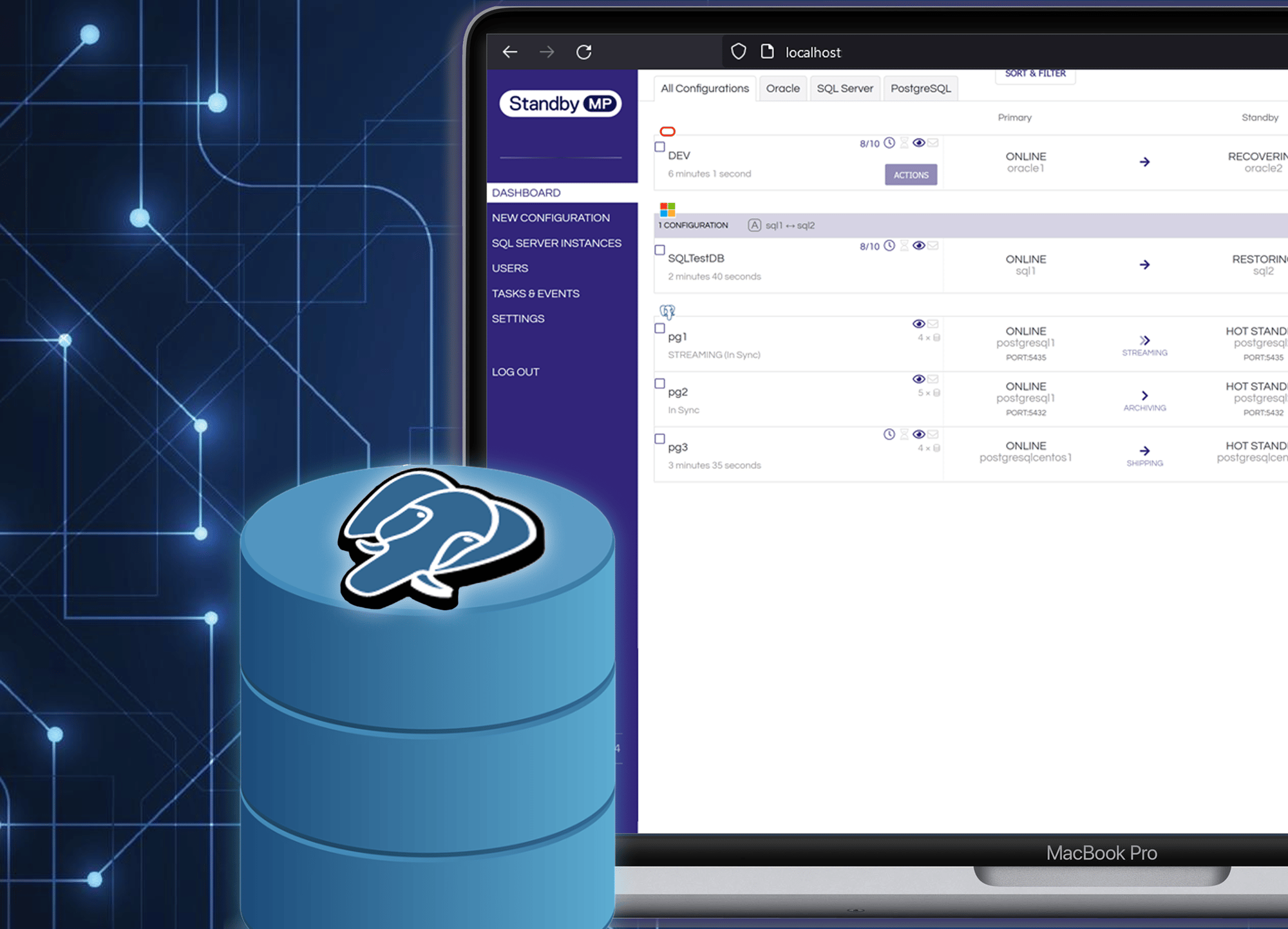

At Dbvisit, our singular focus is delivering Gold Standard Disaster Recovery software to enhance and simplify the creation and management of warm standby environments. With the launch of StandbyMP v11.5 for PostgreSQL, there is now a powerful, standardized way to create, manage, and monitor highly resilient standby clusters, with access to industry-leading 24/7 support for complete peace of mind.

Introducing StandbyMP v11.5

For the first time, you can manage Disaster Recovery for all your databases and clusters from a single pane of glass. Get full visibility of Oracle SE databases, Microsoft SQL Server instances, and now PostgreSQL clusters from a common interface. Manage DR setup and status notifications, and access a range of powerful features, automation, and guided workflows that let you execute common DR tasks across multiple databases and clusters.

Setting up reliable Disaster Recovery for PostgreSQL requires much more than running a single "set up streaming" command. In practice, it involves running separate steps with different command-line tools, manually editing configuration files, and making sure all the settings match up. If you make even a trivial mistake in a config file, you are on your own; worse, you may not discover the problem until disaster strikes some time later.
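
To make the config-file risk concrete, here is a minimal Python sketch (not StandbyMP code) that reads postgresql.conf-style text and flags a few settings that quietly break a standby. The function names `parse_conf` and `check_primary` are hypothetical, the defaults assume PostgreSQL 10 or later, and the parser deliberately ignores `include` directives and the newer `archive_library` setting.

```python
import re

# Defaults assumed for PostgreSQL 10+ (9.6 and earlier defaulted to
# wal_level = minimal and max_wal_senders = 0).
DEFAULTS = {
    "wal_level": "replica",
    "max_wal_senders": "10",
    "archive_mode": "off",
    "archive_command": "",
}

# "name = value" or "name value", then an optional "# comment".
# A quoted value may contain spaces and '' escapes.
LINE_RE = re.compile(r"^\s*([A-Za-z_.]+)(?:\s*=\s*|\s+)('(?:[^']|'')*'|[^\s#]+)")


def parse_conf(text: str) -> dict[str, str]:
    """Parse postgresql.conf-style text; later entries override earlier ones."""
    settings: dict[str, str] = {}
    for line in text.splitlines():
        match = LINE_RE.match(line)  # commented-out and blank lines don't match
        if match:
            name, value = match.group(1).lower(), match.group(2)
            if value.startswith("'"):
                value = value[1:-1].replace("''", "'")
            settings[name] = value
    return settings


def check_primary(conf_text: str) -> list[str]:
    """Return human-readable problems that would stop a standby from keeping up."""
    s = {**DEFAULTS, **parse_conf(conf_text)}
    problems = []
    if s["wal_level"].lower() not in ("replica", "logical"):
        problems.append(f"wal_level is {s['wal_level']!r}; standbys need 'replica' or 'logical'")
    if not s["max_wal_senders"].isdigit() or int(s["max_wal_senders"]) < 1:
        problems.append("max_wal_senders must be at least 1 for streaming replication")
    archiving = s["archive_mode"].lower() in ("on", "always", "true", "yes", "1")
    if archiving and not s["archive_command"].strip():
        problems.append("archive_mode is on but archive_command is empty, so no WAL is archived")
    return problems


sample = """
wal_level = minimal        # a one-word slip: 'replica' was intended
archive_mode = on
#archive_command = 'cp %p /mnt/archive/%f'
"""
for problem in check_primary(sample):
    print(problem)
```

Neither mistake in `sample` stops the primary from starting, which is exactly why this kind of error tends to surface only when a standby is needed.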

StandbyMP delivers standardized, automated, verified standby setup across any supported PostgreSQL version and operating system.

Smart One-Click Actions

PostgreSQL behaves differently depending on the version and the underlying operating system: useful utilities and parameters have different names, and configuration files live in different locations.

StandbyMP handles all this quietly and competently behind the scenes. Remove complexity and save time with pre-checks and intuitive workflows. Work smarter, not harder, with one-click, multi-cluster actions such as Creation, Switchover or Failover.
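
As an illustration of the version and OS differences described above, the sketch below records a few well-known renames (the "xlog" to "wal" renames in PostgreSQL 10, and the replacement of recovery.conf in PostgreSQL 12) plus typical package layouts. The helper names `names_for` and `default_conf_path` are hypothetical, the paths assume community packages on Debian/Ubuntu and PGDG RPMs on RHEL, and real hosts can differ.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PgNames:
    wal_dir: str          # WAL directory inside the data directory
    wal_dump_tool: str    # command-line utility for inspecting WAL files
    switch_wal_func: str  # SQL function that forces a switch to a new WAL file
    standby_config: str   # where standby/recovery settings are written


def names_for(major: int) -> PgNames:
    """Version-dependent names; 'major' is 9 for any 9.x release, 16 for 16.x."""
    if major < 10:  # the 'xlog' names were renamed to 'wal' in PostgreSQL 10
        return PgNames("pg_xlog", "pg_xlogdump", "pg_switch_xlog()", "recovery.conf")
    if major < 12:  # recovery.conf was replaced by signal files in PostgreSQL 12
        return PgNames("pg_wal", "pg_waldump", "pg_switch_wal()", "recovery.conf")
    return PgNames("pg_wal", "pg_waldump", "pg_switch_wal()",
                   "postgresql.conf (or postgresql.auto.conf) + standby.signal")


def default_conf_path(os_family: str, version: str) -> str:
    """Typical postgresql.conf location, e.g. version '9.6' or '16'."""
    if os_family == "debian":  # Debian/Ubuntu packages keep config under /etc
        return f"/etc/postgresql/{version}/main/postgresql.conf"
    if os_family == "rhel":    # PGDG RPMs keep it inside the data directory
        return f"/var/lib/pgsql/{version}/data/postgresql.conf"
    raise ValueError(f"no convention recorded for {os_family!r}")


for major in (9, 11, 16):
    print(major, names_for(major))
print(default_conf_path("debian", "16"))
```

Every DR script written by hand has to encode a table like this correctly for each host it touches.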

Easier Patching & Maintenance

Use One-Click Graceful Switchover to shift database operations to a standby server with zero data loss while you patch or maintain the primary. Once maintenance is complete, switch back to the original primary with just a few clicks, ensuring continuous availability and reducing disruption to critical services.

Graceful Switchovers make server maintenance and patching simple and risk-free.
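
A switchover is only zero-data-loss if the standby has replayed everything the old primary wrote before it is promoted. As a minimal sketch (hypothetical function names, not StandbyMP's internals), that check comes down to comparing two log sequence numbers (LSNs), which PostgreSQL prints as two hexadecimal halves separated by a slash. It assumes you read the cleanly stopped primary's "Latest checkpoint location" from `pg_controldata` and the standby's position from `pg_last_wal_replay_lsn()` (PostgreSQL 10+).

```python
def parse_lsn(lsn: str) -> int:
    """Convert an LSN such as '0/3000148' (two hex halves) into one 64-bit integer."""
    high, low = lsn.strip().split("/")
    return (int(high, 16) << 32) | int(low, 16)


def safe_to_promote(primary_checkpoint_lsn: str, standby_replay_lsn: str) -> bool:
    """True once the standby has replayed past the primary's shutdown checkpoint.

    Assumption: primary_checkpoint_lsn is the start of the shutdown checkpoint
    record (pg_controldata), while standby_replay_lsn is the end of the last
    replayed record (pg_last_wal_replay_lsn()), so the standby must be strictly past it.
    """
    return parse_lsn(standby_replay_lsn) > parse_lsn(primary_checkpoint_lsn)


# Comparing the raw strings would get this wrong: '0/9000000' > '0/10000000' as text.
print(parse_lsn("0/9000000") < parse_lsn("0/10000000"))
print(safe_to_promote("0/3000148", "0/30001C0"))
```

Parsing to integers matters because LSN strings are not zero-padded, so plain text comparison gives the wrong order.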

How it works - 3 ways to manage PostgreSQL Disaster Recovery

Real-Time Data Streaming - Leverage PostgreSQL's powerful real-time replication functionality to keep a standby cluster continuously up-to-date. Easily import existing streaming configurations to gain compelling monitoring and maintenance functionality through StandbyMP.
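
For context, seeding a streaming standby by hand typically starts with `pg_basebackup`. The sketch below only builds the command line (it does not run it) so the individual flags are visible; the host name, the `replicator` role, and the data directory are hypothetical, and the primary also needs a `pg_hba.conf` entry that allows replication connections from the standby host.

```python
import shlex


def basebackup_command(primary_host: str, data_dir: str,
                       user: str = "replicator", port: int = 5432) -> list[str]:
    """Build a pg_basebackup invocation that seeds a streaming standby."""
    return [
        "pg_basebackup",
        "-h", primary_host,    # primary server to copy from
        "-p", str(port),
        "-U", user,            # a role with the REPLICATION attribute
        "-D", data_dir,        # empty target data directory on the standby host
        "-X", "stream",        # stream WAL alongside the copy so it is consistent
        "-R",                  # write standby settings (standby.signal on PG 12+)
        "-P",                  # report progress
    ]


print(shlex.join(basebackup_command("db-primary.example.com", "/var/lib/pgsql/16/data")))
```

On PostgreSQL 12 and later, `-R` creates `standby.signal` and adds `primary_conninfo` to `postgresql.auto.conf`; older versions write a `recovery.conf` instead.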

Archive Mode WAL Shipping - Use PostgreSQL's time-tested and reliable Write-Ahead Log (WAL) files, together with its Archive Mode settings, to keep your standby cluster current.
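
A minimal sketch of what archive-based WAL shipping involves at the configuration level, modeled on the `archive_command` and `restore_command` examples in the PostgreSQL documentation. The `/mnt/wal_archive` directory is a hypothetical shared mount visible to both hosts, and `archive_settings` is a hypothetical helper. On PostgreSQL 12+ the standby line goes in `postgresql.conf` alongside a `standby.signal` file; earlier versions put it in `recovery.conf` with `standby_mode = 'on'`.

```python
def archive_settings(archive_dir: str) -> tuple[str, str]:
    """Return (primary, standby) config lines for WAL shipping via a shared directory.

    archive_dir must be readable by the standby and must not contain quotes or spaces.
    """
    primary = "\n".join([
        "wal_level = replica",
        "archive_mode = on",  # changing archive_mode requires a server restart
        # %p = path of the WAL file to archive, %f = its file name;
        # 'test ! -f' refuses to overwrite a file that was already archived.
        f"archive_command = 'test ! -f {archive_dir}/%f && cp %p {archive_dir}/%f'",
    ])
    # On the standby, restore_command copies each requested WAL file back in.
    standby = f"restore_command = 'cp {archive_dir}/%f \"%p\"'"
    return primary, standby


primary_conf, standby_conf = archive_settings("/mnt/wal_archive")
print(primary_conf)
print(standby_conf)
```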

Manual File Shipping - When you need total control, keep your standby cluster in sync by manually creating and restoring transactional backups on a set schedule.
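
The scheduled-shipping idea can be pictured as a small job run from cron: copy every completed WAL segment that the standby does not have yet. This is a hypothetical Python sketch, not how StandbyMP implements Manual File Shipping. It assumes `src` is a directory of completed segments (for example, one filled by `archive_command`) rather than a live `pg_wal`, and it skips timeline `.history` files for brevity.

```python
import re
import shutil
from pathlib import Path

# WAL segment names are 24 uppercase hex digits: timeline, log, segment.
WAL_NAME = re.compile(r"^[0-9A-F]{24}$")


def ship_new_segments(src: Path, dst: Path) -> list[str]:
    """Copy WAL segments missing from dst, oldest first; return the names shipped."""
    dst.mkdir(parents=True, exist_ok=True)
    shipped = []
    for f in sorted(src.iterdir()):  # hex names sort in WAL order
        if f.is_file() and WAL_NAME.match(f.name) and not (dst / f.name).exists():
            # Copy under a temporary name, then rename, so a reader of dst
            # never sees a half-written segment (rename is atomic on one filesystem).
            tmp = dst / (f.name + ".partial")
            shutil.copy2(f, tmp)
            tmp.rename(dst / f.name)
            shipped.append(f.name)
    return shipped
```

Running it twice in a row ships nothing the second time, which makes it safe to schedule at a fixed interval.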

Key features of StandbyMP include:

- Unique MultiPlatform architecture enables PostgreSQL, Oracle and SQL Server Disaster Recovery from one application.

- Highly intuitive GUI, where configurations can be viewed and managed from a single dashboard.

- Multi-database/cluster actions, even across database platforms.

- Real-time, distributed, event-driven communication.

- Graceful Switchovers enable effortless service maintenance and patching.

- Greater scalability with the ability to manage hundreds of database hosts and DR configurations.

Empower your database management with StandbyMP!

Head of Marketing
